Related keywords:

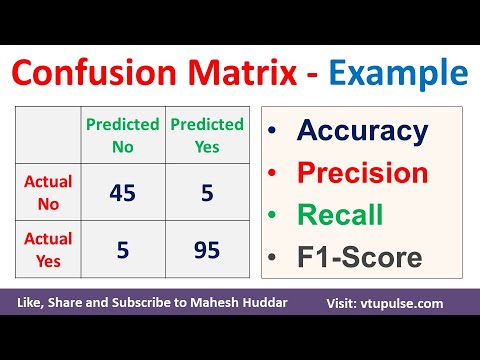

how to calculate model precision, how to calculate model accuracy, how to calculate model accuracy in python, how to measure model accuracy, how to find model accuracy, how to determine model accuracy, how to calculate predictive model accuracy, how to calculate precision, how to calculate precision in statistics, how to calculate the relative precision